Few scholars who truly consider themselves “Digital Humanists” will disagree with the following statement: “A major goal of Digital Humanities is to enable technology to analyze literature the same way a human does.” After all, if that goal were met, the work that literary scholars do over several days of reading could be done vastly faster. Instead of analyzing a few texts per week or month, a computer could theoretically do the same amount of work in a fraction of a second, assuming, that is, that it can arrive at conclusions of the same breadth and insight as a Ph.D. in English Literature (which I believe, given time, will prove true).

The Text Encoding Initiative

TEI, or the Text Encoding Initiative, is advertised as a set of “guidelines for the encoding of humanities texts.” Built on XML, TEI is an extensive library of categories and tags with which an encoder can mark up a text. The tag set can be customized, but most tags are standardized and center on denoting syntax, punctuation, key characters, and places within a piece of text. TEI is widely used in the Digital Humanities community, but its ends don’t seem to justify its means. While Digital Humanities should be about efficiency, speed, and using technology to save time, encoding a text with TEI is a lengthy, manual process. The learning curve is steep, and the payoff of one’s encoding labor is often far removed from the initial effort, since only advanced programs written by seasoned developers can make use of the TEI data.

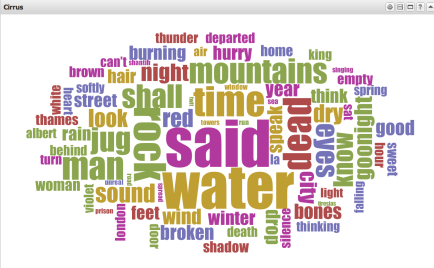

An example of a passage of text with TEI markup.
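To make the tag structure concrete, here is a minimal sketch of what the encoding is meant to enable: a program pulling tagged people and places back out of a TEI document. It assumes the standard TEI P5 namespace and the `persName` and `placeName` elements; the sample passage is invented for illustration, and it is a stripped-down fragment (a complete TEI file would also carry a `teiHeader`).

```python
import xml.etree.ElementTree as ET

# Namespace used by TEI P5 documents.
TEI_NS = {"tei": "http://www.tei-c.org/ns/1.0"}

# A tiny, invented TEI fragment for illustration only.
SAMPLE = """<TEI xmlns="http://www.tei-c.org/ns/1.0">
  <text>
    <body>
      <p><persName>Anna</persName> left <placeName>Lisbon</placeName> at dawn,
         and <persName>Tomas</persName> followed her to <placeName>Porto</placeName>.</p>
    </body>
  </text>
</TEI>"""


def extract_entities(tei_xml: str) -> dict[str, list[str]]:
    """Collect the text of every persName and placeName element, in document order."""
    root = ET.fromstring(tei_xml)
    return {
        "people": [el.text for el in root.iterfind(".//tei:persName", TEI_NS)],
        "places": [el.text for el in root.iterfind(".//tei:placeName", TEI_NS)],
    }


if __name__ == "__main__":
    print(extract_entities(SAMPLE))
```

Running it prints `{'people': ['Anna', 'Tomas'], 'places': ['Lisbon', 'Porto']}`. The extraction itself is short; the expensive part is the hand-placed tags it depends on.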

Although it makes sense to put a system in place for marking up a text, TEI is simply too involved to be worthwhile. It is designed to help a computer do close reading of a story, yet applying it to a work requires close reading in its own right. Instead, Digital Humanists should be looking into new algorithms that automate both the encoding and the text-mining process. That way, the effort to solve the problem Digital Humanities set out to address, making sense of an insurmountably vast corpus of written material, can stay on a detour-free route toward its goal.
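As a rough illustration of what automating the encoding could look like, here is a minimal sketch that inserts TEI `persName` and `placeName` tags without a human reading the text. It swaps in the simplest possible technique, dictionary lookup against hypothetical name lists (`PEOPLE`, `PLACES`); it is not a method proposed here, and a serious pipeline would use a trained named-entity recognizer, since a lookup only finds names someone has already listed.

```python
import re
from xml.sax.saxutils import escape

# Hypothetical lookup lists for illustration; a real system would use
# a trained named-entity recognizer instead of hand-written lists.
PEOPLE = {"Anna", "Tomas"}
PLACES = {"Lisbon", "Porto"}


def auto_encode(text: str) -> str:
    """Wrap known person and place names in TEI persName/placeName tags."""
    # Try longer names first so multi-word names beat their sub-parts;
    # \b keeps "Anna" from matching inside "Annabel".
    names = sorted(PEOPLE | PLACES, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(re.escape(n) for n in names) + r")\b")

    pieces = []
    last = 0
    for match in pattern.finditer(text):
        # Escape XML special characters in the untagged text between matches.
        pieces.append(escape(text[last:match.start()]))
        name = match.group(1)
        element = "persName" if name in PEOPLE else "placeName"
        pieces.append(f"<{element}>{escape(name)}</{element}>")
        last = match.end()
    pieces.append(escape(text[last:]))
    return "<p>" + "".join(pieces) + "</p>"


if __name__ == "__main__":
    print(auto_encode("Anna left Lisbon at dawn."))
```

Running it prints `<p><persName>Anna</persName> left <placeName>Lisbon</placeName> at dawn.</p>`. Swapping the lookup for a statistical model would keep the same tagging loop while removing the need to enumerate every name by hand.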